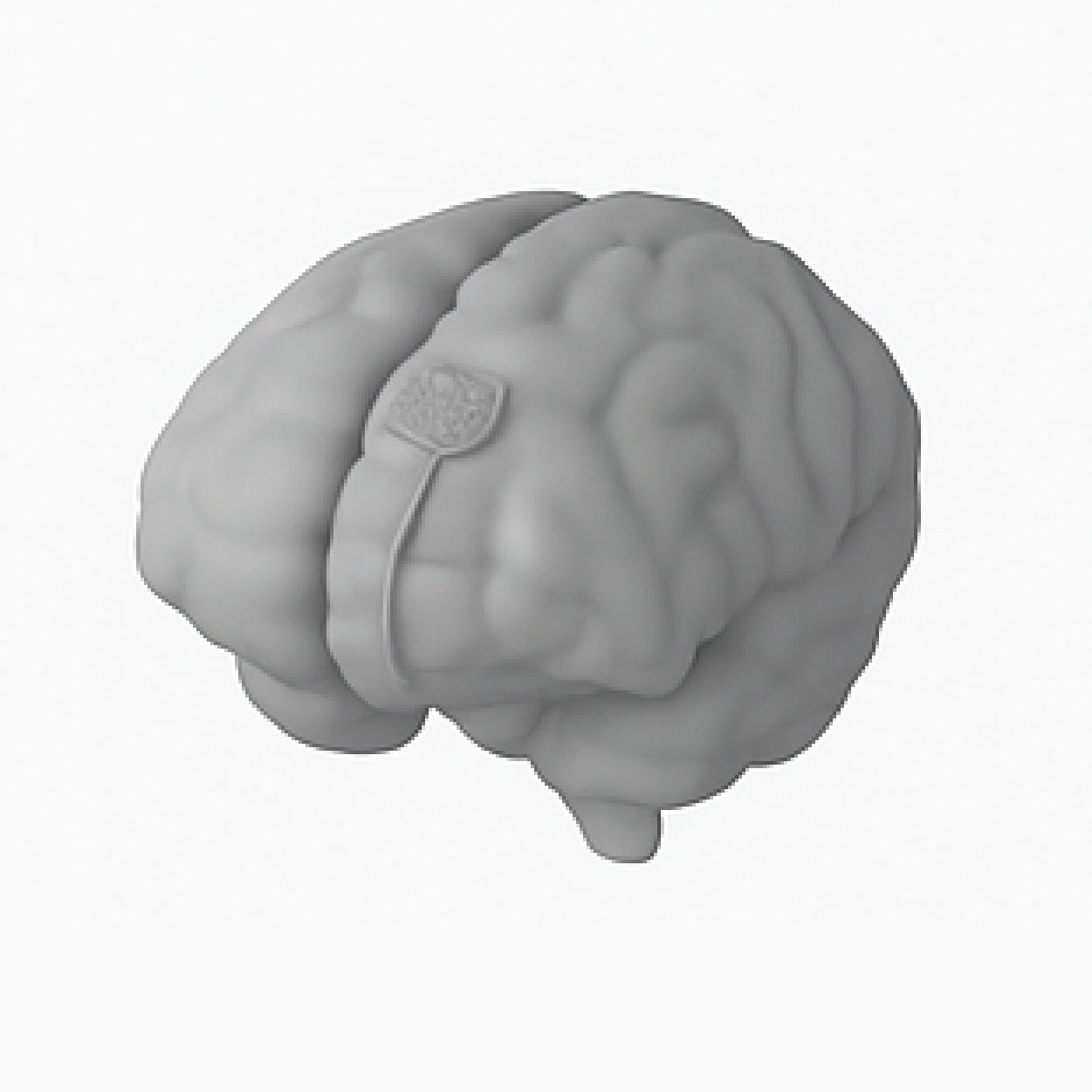

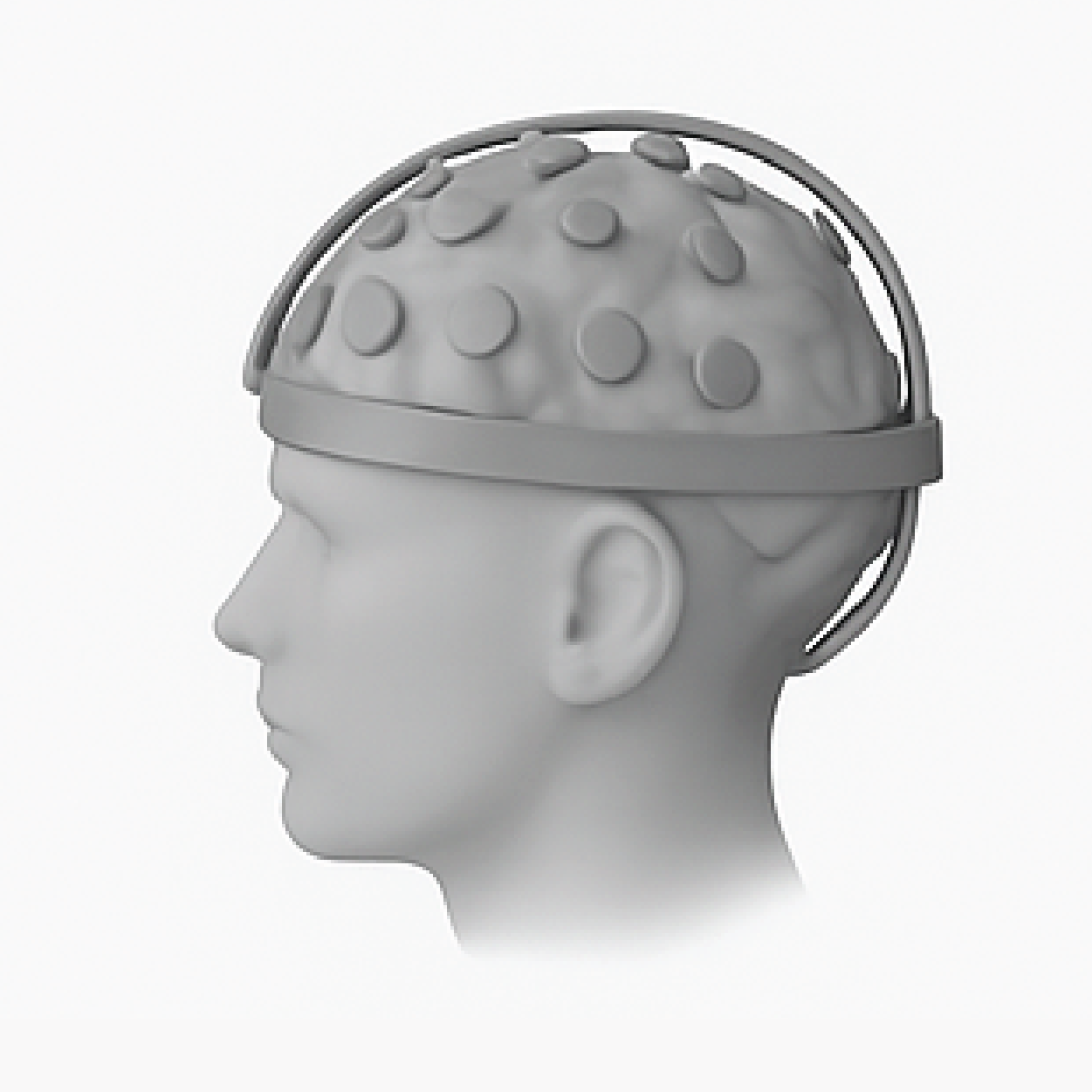

Our brains and bodies speak a rich and complex biological language of neural and physiological

signals, a language that AI models are increasingly capable of deciphering as large-scale

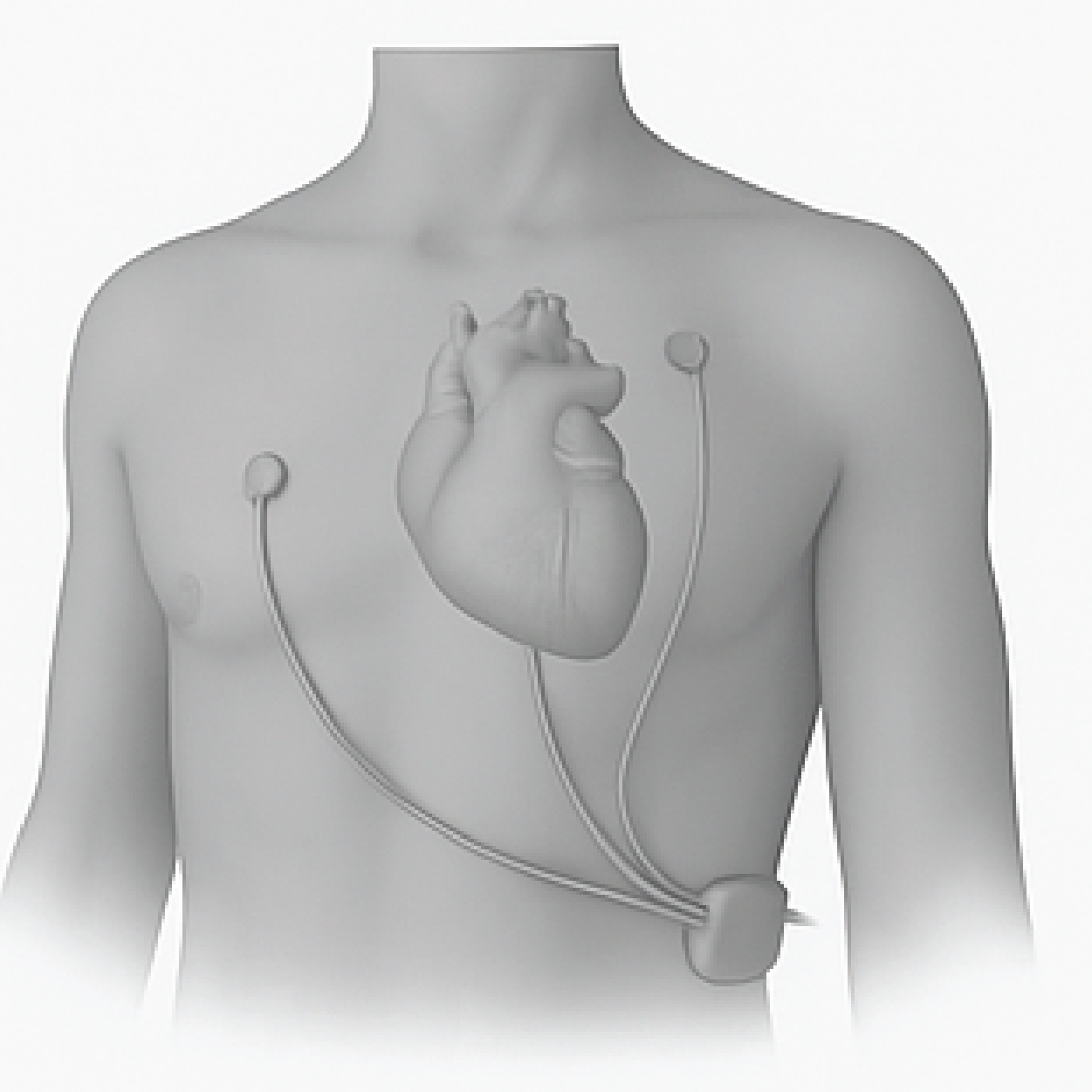

datasets become available. Recent advances in brain interfacing and wearable technologies,

including EEG, intracortical electrophysiology, EMG, MEG, and ECG, have enabled the broad

collection of these signals across real-world contexts and diverse populations. This growing

wealth of data is driving a shift toward foundation models: large-scale, pretrained AI systems

designed to learn from biosignals and generalize across diverse downstream applications, from

brain-computer interfacing to health monitoring.

Realizing this potential, however, requires addressing the unique challenges that come with biosignal time series: they are noisy, heterogeneous, and collected under variable conditions across subjects, devices, and environments. To meet these challenges, this workshop brings together neuroscientists, biomedical engineers, wearable tech researchers, and machine learning experts advancing foundation model approaches. Through interdisciplinary dialogue, we aim to catalyze the next generation of AI models that can capture the complexity of the brain, body, and behavior at scale.